Woman who spent years scrubbing explicit video from internet urges tech firms to make it easier to remove

Tech firms say their reporting mechanisms are robust, but new report says child porn not removed fast enough

She was only 14 when she was groomed to have virtual sex with an older man she met on social media.

She had no idea he was recording it and that she would spend years suffering the fallout online and in her real life.

"It [the video] is like the head of the hydra. You cut one off, and then there's three more," said the woman, who is from a large Canadian city and whose identity is being concealed out of concern for her safety.

She recalls what it was like, as a frightened teenage girl, to realize the video of her was publicly available.

"I honestly thought every single day was going to be either the last day I'm alive or the last day I have any social life at all because it felt like I was on the precipice of my life — exploding every day," she said.

Child protection advocates say this woman is just one of the thousands whose illegal, underage graphic videos and images are being shared online around the world — and who say they are finding it hard to report so-called child sexual abuse material (CSAM) and have it removed.

Distribution underreported

More than 1,400 children were lured using a computer last year, according to a Statistics Canada report of police-reported cybercrime. That compares to 850 in 2015.

There were 4,174 cases of making or distributing child porn reported to police in 2019, compared to 850 in 2015.

However, much like sexual assault, only a small percentage of victims ever go to police. Many try to manage the situation alone. Cybertip.ca, a national tip line for reporting underage sexual exploitation, processed 28,556 cases in 2019.

Online predators use encryption, cloaking technology and the dark web to create and share CSAM, commonly known as child pornography.

The increased use of smartphone cameras, social media and cloud storage has allowed CSAM to multiply and recirculate throughout the internet, on platforms as diverse as Facebook, Instagram, Twitter and Google, as well as legal adult porn sites.

It's how the young woman's nightmare began. CBC has agreed to remove all identifying information about the woman as a condition of her agreeing to the interview and to prevent further harassment.

How it started

She was posting selfie GIFs of herself on social media and caught the attention of a man who said he lived nearby and told her he was in his 20s.

They started communicating. She was giddy that an older man was interested in her and had dreams of meeting him in person one day.

"I had Skype downloaded on my phone so we can text during the day, and he would tell me how pretty I was and all this stuff and how I was so special, basically grooming me into believing that we had something so special and so breathtaking," she recalled last week in an interview with CBC News.

"And then he brought up the topic of doing Skype sex, basically, for him and with him. And I remember even being hesitant. But the driving force for me to do this was, this boy likes me and the only way he will continue to like me is if I do this for him."

So, late one night, she agreed.

"I did whatever he asked. But what I didn't know was that he had downloaded recording software ... before all of this started. And this was a deliberate plan."

Shortly after, the professed boyfriend disappeared. She never knew his real name or age or where he lived.

Meanwhile, links to the video started popping up on her social media accounts.

"I got messages from people in India telling me I was in a WhatsApp thread of girls," she said. "I have got messages from Poland telling me, 'You're on this website' … I was 14, and I started getting links of me on Pornhub and … all these websites that I didn't even know existed.

"It's like a violation on every front. I could not open my computer without having to deal with this and see it and experience it."

In the years since the video was posted publicly, she's been stalked, threatened and harassed, she said. People have posted photos of her home, shared her address, the name of her school and even the classes she's taking.

"When I got into university, it just got worse," she said. "I feel like they found out I turned 18, and the gates of hell just opened."

Coping alone

So, every day for the last few years, she has spent hours searching her name to locate the video online, then contacting the platforms on which it was posted and asking to have it removed.

"At first, I started off nicely," she said. "I'm like, 'Hey, can you please take this down? This wasn't consensual.' And they're like, 'We have no proof of that or that it's you, so sorry about that.' I would tell them that I was underage … And they would again say, 'Oh, we have no proof of that.'"

More often, she would get an automated email with a link at the bottom, sending her to a reporting form.

Frustrated, she found a template online for a standard cease and desist order and sent emails to various platforms threatening legal action.

"You're 16 years old, and you're pretending to be a lawyer so you can get your non-consensual child pornography taken off of Pornhub, and you're reading the comments, and all these disgusting people are commenting about how young you look and how great the video is," she said.

"Sometimes, it worked; sometimes, it didn't.… I would say none of [the platforms] were helpful."

Pornhub says it reviews flagged videos

To protect the woman's identity, CBC News could not ask the platforms about this specific case. She said she no longer has documentation showing how each platform responded to her requests to remove the video.

However, a spokesperson for Montreal-based Pornhub said when a video is flagged, it is removed immediately for review by a team of moderators. It's either deleted entirely or, if it does not violate Pornhub's terms of service, it is placed back on the platform.

Pornhub later clarified that if a video is flagged as non-consensual, "it is removed immediately and never placed back on the platform ... no questions asked."

The woman's lonely crusade finally ended in March 2020.

After receiving a threatening video call from a stranger on social media, she went to police. It was the first time she had told anyone the entire story.

"I was basically sob-crying the entire time," she said.

She said she was relieved when the female police officer believed her.

The officer connected her to the Winnipeg-based Canadian Centre for Child Protection, a charitable organization formed in 1985 as Child Find Manitoba following the abduction and murder of 13-year-old Candace Derksen. It has operated Cybertip.ca since 2002.

Difficult to report

After hearing the experiences of the woman who spoke with CBC and others, the centre decided to analyze just how difficult it is for victims and the general public to report suspected or known cases of child sexual abuse material.

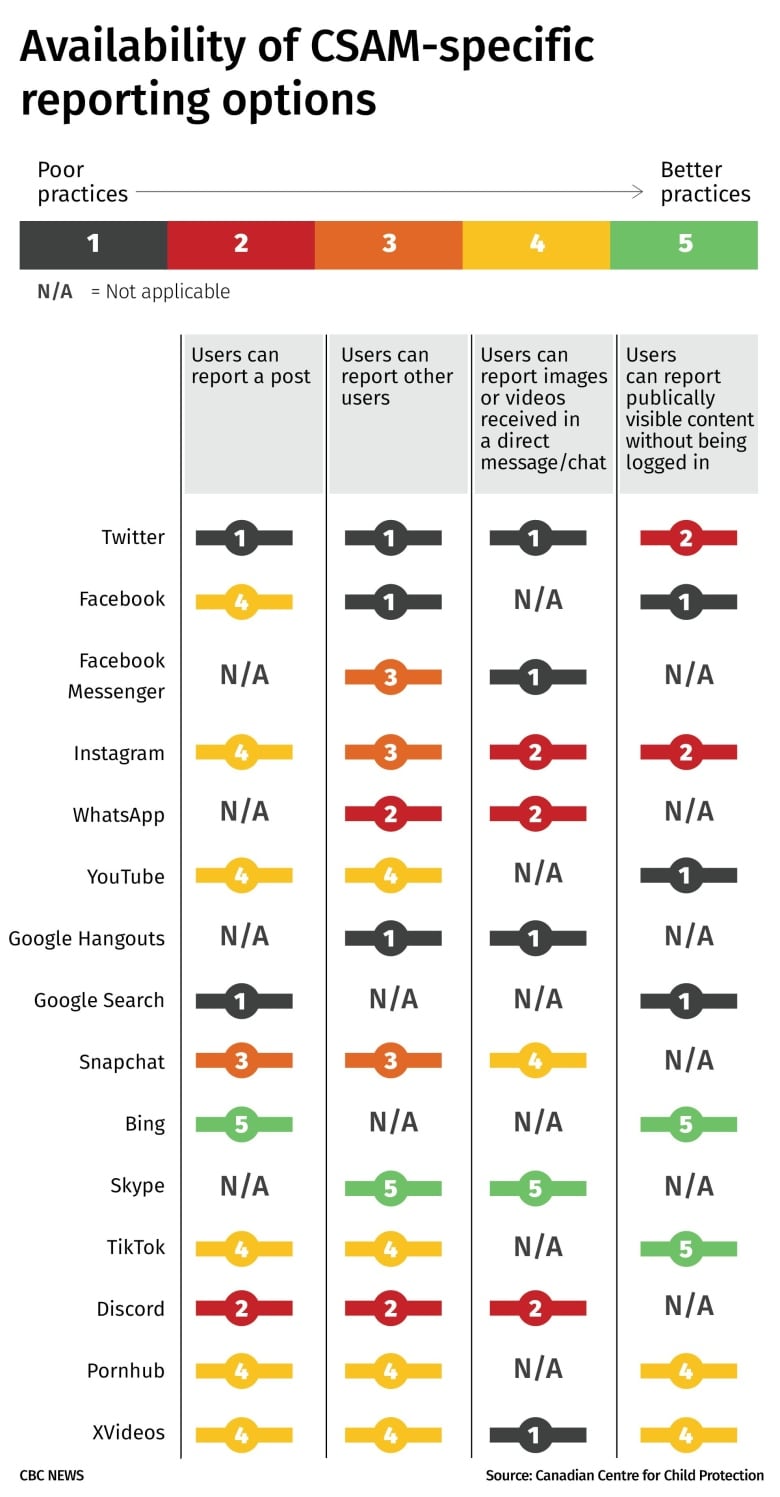

In a report released Tuesday, the centre analyzed 15 different platforms between Sept. 16 and Sept. 23 of this year, including Facebook, YouTube, Twitter and TikTok, as well as adult sites such as Pornhub.

At the time of the review, the centre said none of the platforms — with the exception of Microsoft's Bing search engine — had content-reporting options specific to CSAM directly in posts, within direct messages or when reporting a user.

Instead, the reporting options used non-specific or ambiguous language and were often difficult to find. Analysts sometimes had to wade through fine print and different links to get to a place where they could file a report. The report urges platforms to make such reporting mechanisms simpler and easier to find.

The centre maintains that this is important because without a reporting category specific to CSAM, which is a Criminal Code offence, platforms have no way of quickly prioritizing user reports within their content moderation process. Swift removal is crucial to curbing the spread of such content.

"It should be a very simple thing to report," said Signy Arnason, the centre's assistant executive director.

"There's a lot of pressure being placed on either the adolescent population or young adults when they're trying to take this on and manage it themselves. And we're abysmally failing them, and the companies have to step up and take some significant responsibility for that."

The centre is making five recommendations to clarify and streamline the CSAM reporting process for platforms that allow user-generated content to be uploaded onto their services:

- Create reporting categories specific to child sexual abuse material.
- Include CSAM-specific reporting options in easy-to-locate reporting menus.
- Ensure reporting functions are consistent across the entire platform.
- Allow reporting of content that is visible without having to create or log into an account.
- Eliminate mandatory personal information fields in content reporting forms.

Platforms respond

The centre provided the 15 online platforms with their specific analysis. CBC News reached out to all of them for a response; only XVideos did not provide a written statement.

All of the companies that replied said they are committed to fighting CSAM online and have taken steps to identify and remove it when flagged.

Several disputed the centre's analysis, saying they do have mechanisms for reporting child exploitation — it just might be called something other than "child sexual abuse material."

A Google spokesperson said the centre's report seems to be missing several important reporting mechanisms on its platforms. For example, on YouTube, reporting a user is not restricted to desktop; you can also report a user on mobile devices.

Meanwhile, on Google Hangouts, users can use the child endangerment form to report grooming a child for sexual exploitation, sextorting a child or sex trafficking a child.

"While most of the content we report to authorities is first discovered through our automated processes, user and third-party reporting is an important part of identifying potential CSAM," Google Canada spokesperson Molly Morgan said.

"As with all of our efforts to combat CSAM, we work with experts — including the Canadian Centre for Child Protection — to identify ways to improve our systems and reporting practices."

Reporting transparent, accessible, says Twitter

The report said it is "extremely difficult" to report CSAM content on Twitter.

Michele Austin, the head of government and public policy for Twitter Canada, said anyone can report content that appears to violate Twitter's rules on its web form, and the company will also investigate other reports.

Links are removed from the site without notice and reported to the U.S. National Center for Missing and Exploited Children, Austin said in a statement. "Our goal is to make reporting more easily understood, accessible and transparent to the general public," she said.

The report said there are no CSAM-specific reporting options on Facebook and its associated platforms — Messenger, Instagram and WhatsApp, which uses end-to-end encryption, leading critics to say reporting functions are minimal.

However, 99.3 per cent of content identified as possible child nudity and sexual exploitation of children was flagged by PhotoDNA or VideoDNA technology and artificial intelligence before users even reported it, Antigone Davis, Facebook's global head of safety, said in an email to CBC News.

"We share the Canadian Centre for Child Protection's view that child sexual abuse material is abhorrent," Davis said.

"We have tested many of the recommendations in this report and have found that they are less effective than other forms of reporting and prevention of this material in the first place. We'd love to work more closely with the Canadian Centre for Child Protection, and other child safety experts globally, to share our learnings."

Facebook said its reporting mechanisms are created so people with different levels of literacy and digital education can use them and so investigators can see offending content and take action quickly. The platform says using a tag such as "child sexual abuse material" may result in people misusing it to send reports that don't belong there, slowing down the takedown process.

Other companies criticized in the report also sent responses to CBC, with some disputing the accuracy of the report's findings.

'We are facing an epidemic'

There are currently no laws in Canada specifically on removing content from web platforms, but even with legislation in place, experts say, it's a jurisdictional challenge to hold someone accountable, depending on where the company's headquarters and servers are located.

Meanwhile, the centre is also urging online platforms and tech companies to make it a priority to develop and use programs for finding child pornography images and video on their sites.

"You're looking at a population that spends 24/7 thinking about ways that they can victimize and harm children," Arnason said of those posting CSAM content. "So we have to, equally on our side, be throwing the resources at trying to address what is happening and how this population is communicating with each other and significantly hurting kids."

In 2017, the centre developed an online web crawler called Project Arachnid. It scans the open and dark web for known images of child sexual abuse and issues notices to companies to remove them.

But even that cannot keep up.

"We have a backlog of 20 million suspect images of child sexual abuse material," Arnason said.

"We are facing an epidemic. We've placed all sorts of protections around children in the offline world, and we've neglected to do so online. And now, children are paying for that in a very significant way, in a traumatic way. So this has got to change."

'They don't want to do content moderation'

Some of that work is being done by Hany Farid, a professor who specializes in the analysis of digital images at the University of California, Berkeley. He co-developed a Microsoft program called PhotoDNA, which creates a digital signature of an image and compares it to others to find copies.

But he said buy-in has been slow because the removal of child sexual abuse images has been mostly left to the discretion of the industry.

"Content moderation is never made easy, and there's a really simple reason for it — because they don't want to do content moderation," Farid said.

"All social media sites profit by user-generated content. Taking down and having to take down and having to review material — it's bad for business ... It creates a liability. And so, they don't want to do it."

Farid said there is always a way to report material, but it's often difficult to find.

Compare that to clear and simple mechanisms for reporting copyright infringement — something for which companies can be held legally liable.

"Why is there not a big fat button that says, 'I think a child is at risk.' Boom. Click it," Farid said.

"The internet is reflecting the physical world. Our children are genuinely in danger. I don't understand why we are not absolutely up in arms, storming the fences at Facebook and TikTok and Twitter and YouTube, demanding that they protect our children."

Accountability and hope

The woman who shared her story with CBC said she did so partly to hold online platforms more accountable for the CSAM content posted on their sites.

"If you see that there's children on your website and you do nothing, you are literally just complacent with what's going on. You are OK with child pornography being exploited on your website," she said.

She would like online platforms to compel those posting material to provide proof of identification and verification that the image or video is consensual and doesn't involve a minor.

She wishes she had reported her own case to police sooner and is relieved Project Arachnid is now searching for her video across the internet so she doesn't have to.

"I didn't understand how much energy I was putting into constantly maintaining all of this, trying to constantly deal with it," she said.

The most important reason for speaking up, she said, is that she wants to give other young people hope that they don't have to bear this burden alone and it's not their fault.

"I used to say to myself all the time, 'How could you be so stupid?'" she said. "But you're a child. And all these people who do these things know exactly what to say."

Can you prevent images from being recorded?

The vast majority of CSAM images and videos begin as consensual, such as live streaming or texting with a boyfriend or girlfriend.

It's always safe to assume that once an image is shared with another party, it could end up on the internet or circulating among other private users, say child protection advocates.

Anyone producing and sharing intimate images or videos of themselves should seriously consider the risks involved. A trusted intimate partner can become a disaffected ex-partner.

How can you prevent CSAM from circulating?

The reality is, once the images are published on the internet, it is difficult to ever have them removed completely, say experts. Any number of users can save copies and re-publish them at a later date.

Live streaming is of particular concern because it provides users with a false sense of security. In practice, many images spread on the internet because some viewers record live streams of explicit material.

For help contact: protectchildren.ca/ or Cybertip.ca